Locating Visual Jokes in Homestuck with Rudimentary Computer Vision

Table of Contents

- Locating Visual Jokes in Homestuck with Rudimentary Computer Vision

- Abstract

- Introduction

- What, Specifically, Do We Want?

- Assembling the Compendium

- Establishing a Baseline with Hamming Distance Of Binary Images

- Edge Detection

- Perceptual Hashing

- Clustering

- The Curse of Dimensionality

- An Edge-Hash Mixed Metric

- The Full Comic using K-Means Clustering

- A Different Representation

- Graph Theory

- Wait, where are their arms?

- Limitations, Space for Improvement, Takeaways

- Appendix A - Cool Clusters

Locating Visual Jokes in Homestuck with Rudimentary Computer Vision

Draft: v1.0.0 | Posted: 12/30/2018 | Updated: 02/09/2018 | confidence of success: 80% | estimated time to completion: 12/28/2018 | importance: Medium

Abstract

I use basic computer vision to identify which panels in the popular webcomic Homestuck are visually similar to each other, in order to find examples of reused art and callbacks to other panels. I scrape the webcomic for its images and try a number of strategies in order to find one that works. This writeup may contain light spoilers for the webcomic.

Introduction

In preparation for a much larger project, I came across the following need: I would like to give examples of visually similar images in Andrew Hussie's webcomic Homestuck. Hussie reuses drawings frequently, and often repurposes previously drawn panels to easily produce new ones involving different characters (e.g. both characters looking at their hands, from the same angle, using an identical drawing but with one character's skin drawn a different color). Hussie uses this repurposing as a means of introducing visual jokes to his webcomic, frequently calling back to other panels, and then calling back to the callbacks. I'd love to be able to point to a number of these reused panels, but no crowdsourced fanmade database of visually similar images in Homestuck has been created (darn), and since Homestuck has >8000 panels, combing through these by hand in O(n²) comparisons to dig them up myself sounds like something I would rather not do.

However, I really wanted those examples of visually similar panels, so I racked my brain about how I could accomplish this until I briefly remembered taking an intro computer vision course in college. The art in Homestuck is pretty simple, so I set out to see what I could do with elementary tools.

If you'd like to play along, the org document for this writeup contains all the code used for this project, and everything used in it can be found on GitHub. I'm going to loosely take a reproducible research approach here: all the code is written in Python and is (hopefully) fairly easy to follow. In general, literate programming seems like a bit of a hassle, but I figured that for a short project it would be a good exercise to try and keep everything organized enough to not feel embarrassed about sharing it.

Likewise, a large portion of this writeup is my multiple attempts at building something that works well. I try a number of different methods in this writeup, a bunch of which don't end up working that well. If you're into the process, great! If you just want to see some cool similar images in the comic feel free to skip to Appendix A where I'll post a number of them.

What, Specifically, Do We Want?

As a brief aside, I'll explain what exactly a "win condition" for this project entails.

Below are two images from two separate panels in Homestuck: 2079 and 2338.¹

These two images are over 250 pages apart and used for entirely different things, yet they're obvious recolors of the same drawing. This sort of keyframe reuse is really common in Homestuck (and media in general), but Homestuck's themes of recursion/self-reference and also the sheer magnitude of the webcomic allow for these frames to acquire a sort of meaning unto themselves.

This is nicely illustrated on panel 2488.²

This image is hilarious.

This is a direct callback to the previously reused drawing, despite being an entirely different drawing - the hands are more realistic human hands rather than the stubby hands in the previous two images. The hands are drawn with no border, with the ring and little fingers drawn together, to give the appearance of four fingers (as in the previous drawings) instead of five.³ There's nothing inherently funny about this drawing on its own, but since we've seen a similar image repeated multiple times before, it becomes a motif which can then be riffed off of.

"Repetition serves as a handprint of human intent"

YOU (YOU) THINK THE ABOVE GRAPHIC LOOKS FAMILIAR. YOU THINK YOU HAVE SEEN THIS GREAT DRAWING BEFORE, IN A DIFFERENT CONTEXT. YOU SEE. THIS IS WHAT MASTER STORY TELLERS REFER TO AS A "VISUAL CALLBACK". TRULY EXCEPTIONAL PROFESSIONALS OFTEN WILL TAKE AN OLD DRAWING THEY DID. THAT SHOWS A SIMILAR SITUATION. AND DO A COUPLE OF HALF ASSED THINGS. LIKE CHANGE THE COLORS. TO MAKE IT SLIGHTLY DIFFERENT.

— Caliborn, Homestuck page 6265

Homestuck is full of these, and I would like to find as many as possible.

Assembling the Compendium

Grabbing the non-flash images in Homestuck is straightforward enough. It can be done with relatively few lines of code thanks to our good friends Requests and Beautiful Soup 4. The code to grab all the image files in the webcomic can be found below.

```python
import requests, bs4

firstp = 1
lastp = 10
#lastp = 8130
imglist = []
for x in range(firstp, lastp+1):
    url = "https://www.homestuck.com/story/" + str(x)
    try:
        page = requests.get(url)
        page.raise_for_status()
    except requests.exceptions.RequestException:
        continue #some numbers are missing from 1-8130, if the link 404s skip it
    #name the parser explicitly so bs4 doesn't have to guess one
    soup = bs4.BeautifulSoup(page.text, "html.parser")
    images = soup.find_all('img', class_="mar-x-auto disp-bl")
    for count, image in enumerate(images, 1):
        imgurl = image['src']
        if imgurl[0] == '/':
            imgurl = "https://www.homestuck.com" + imgurl #handle local reference
        response = requests.get(imgurl)
        if response.status_code == 200:
            with open("./screens/img/" + str(x) + "_" + str(count) + "." + imgurl.split(".")[-1], 'wb') as f:
                f.write(response.content)
            #format panelnumber_imagecount.format saves all
```
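As an aside, the standard library's `urljoin` could stand in for the manual check for a leading `/` when resolving image URLs. A small sketch of that alternative (the image paths and the CDN host here are invented examples, not real files on the site):

```python
from urllib.parse import urljoin

page_url = "https://www.homestuck.com/story/1"

# A site-relative src is resolved against the page's host
relative = urljoin(page_url, "/images/example/00001.gif")
print(relative)  # https://www.homestuck.com/images/example/00001.gif

# An absolute src is returned unchanged
absolute = urljoin(page_url, "https://cdn.example.com/00002.gif")
print(absolute)  # https://cdn.example.com/00002.gif
```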

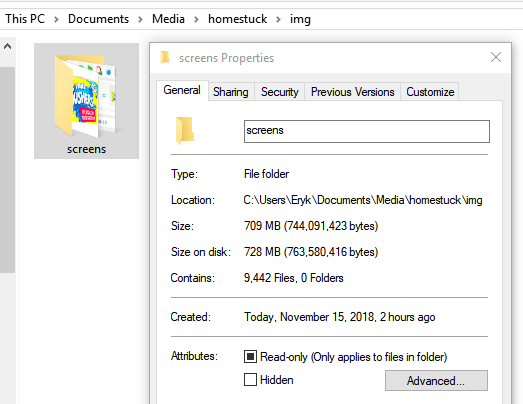

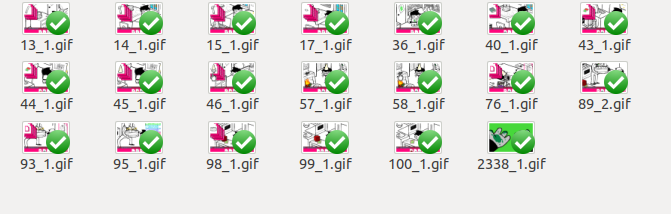

This assembles us a corpus of 9,442 images, mostly gifs. This is a pretty decent corpus, as far as datasets for images go, especially considering most images are gifs which contain multiple frames. It's pretty crazy how large this webcomic is, when you have it all in one folder like this. Just the images alone are more than 700MB.

I won't bother with the flashes for now - although they're certainly an important part of the comic and well worth a closer look later, there's well over three hours of flashes and extracting every frame of every flash does not sound fun or necessary for this project for now.

Establishing a Baseline with Hamming Distance Of Binary Images

A really basic thing we can start with is taking a black-and-white conversion of the images in the dataset and calculating the Hamming Distance between them. I have a feeling this won't work particularly well, but it will be useful as a metric of comparison between this and other metrics (plus it should be fairly easy to implement).
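To make the metric concrete, here is a minimal sketch of Hamming distance on tiny hand-made "images", flattened to lists of 0s and 1s. The pixel values and the helper name `hamming_distance` are made up for illustration; the last lines show the "image or its inverse, whichever is closer" trick that the real comparison code in this section also uses.

```python
def hamming_distance(a, b):
    """Count the positions where two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must be the same length")
    return sum(p != q for p, q in zip(a, b))

# Two hypothetical 3x3 binary images, flattened row by row (1 = white, 0 = black)
img_a = [1, 1, 1,
         1, 0, 1,
         1, 1, 1]
img_b = [1, 1, 1,
         1, 0, 0,
         1, 1, 1]
# img_c is img_a with black and white swapped
img_c = [1 - p for p in img_a]

print(hamming_distance(img_a, img_b))  # 1: a single pixel differs
print(hamming_distance(img_a, img_c))  # 9: every pixel differs

# Taking the smaller of "pixels that differ" and "pixels that match" treats
# an image and its exact inverse as identical
differ = hamming_distance(img_a, img_c)
print(min(differ, len(img_a) - differ))  # 0
```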

We begin with a toy dataset of ten images, which I selected by hand to give a good representative example: The images roughly fall into four groups: [Jade + Robot Jade], [Jade, John, and Terezi at computers], [yellow, green, human hands], [two random images]. Likewise, we will only bother looking at the first frame in these images, despite the fact that they are gifs. As with the flashes, it's not that it would be too difficult to do this (merely splitting the gifs into each frame + instructing the program to ignore frames within the same gif for comparisons would be easy enough), but it would just be a bit more trouble than I think it's worth for now.

Ideally the images in these groups should resemble each other more than they resemble the other images, with the two random images as control. The images that are more direct art recycles should be more similar to each other than they are to merely-similar images (e.g. the images of John and Jade should resemble each other more than they do to Terezi, since John and Jade are in the same spot on the screen and Terezi is translated in the frame).

We can start by converting every image to a binary image consisting of only black and white pixels.

```python
#Convert all images to binary image
from PIL import Image
import os

for image in os.listdir('./screens/img/'):
    img_orig = Image.open("./screens/img/" + image)
    img_new = img_orig.convert('1')
    dir_save = './screens/binary/' + image
    img_new.save(dir_save)
```

This will allow us to compare each image with a simple pixel-by-pixel comparison and count the number of pixels where the two images differ. While this is very straightforward, it sort of leaves us at the mercy of what colors are used in the panel, so the conversion isn't perfect.

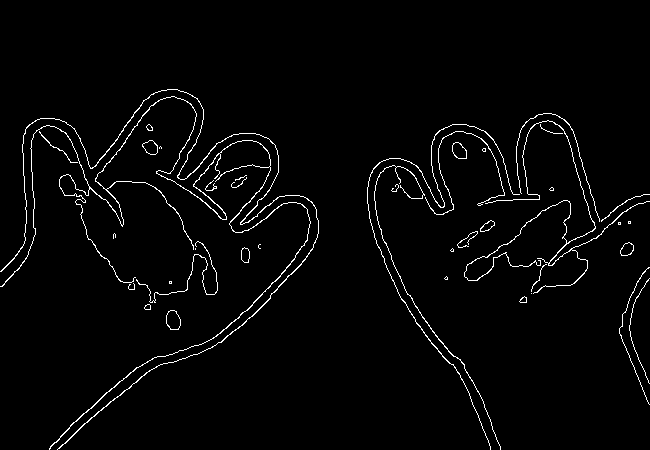

For example, we have the two hands panels converted to binary images. Here we see that the backgrounds are assigned different colors, as well as the blood being completely eliminated in the first image but not the second.

Some objects also blend into the background, which could cause problems as well.

This method will likely work extremely well for detecting duplicate images (since they will produce the same binary image) but leave something to be desired for redraws (which have flaws like the two mentioned above).

Anyways, let's give it a shot.⁴

```python
import PIL
from PIL import Image
import io, itertools, os
from joblib import Parallel, delayed
import multiprocessing
import numpy as np

def hamming(x, y):
    if len(x) == len(y):
        #Choosing the distance between the image or the image's inverse, whichever is closer
        return min(sum(c1 != c2 for c1, c2 in zip(x, y)), sum(c1 == c2 for c1, c2 in zip(x, y)))
    else:
        return -1

def compare_img(image1, image2, dire, resize):
    i1 = Image.open(dire + image1)
    if resize:
        i1 = i1.resize((100,100))
    i1_b = i1.tobytes()
    i2 = Image.open(dire + image2)
    if resize:
        i2 = i2.resize((100,100))
    i2_b = i2.tobytes()
    dist = hamming(i1_b, i2_b)
    return dist

#including here a helper function so I can call a function in parallel
def output_format(image1, image2, dire, resize):
    return [image1, image2, compare_img(image1, image2, dire, resize)]

def hamming_a_directory(dire, resize=True):
    num_cores = multiprocessing.cpu_count()
    return Parallel(n_jobs=num_cores)(delayed(output_format)(image1, image2, dire, resize)\
        for image1, image2 in itertools.combinations(os.listdir(dire), 2))

def quantize(img_arr, dimx=8, dimy=8):
    #compute the mean once instead of once per pixel
    mean = np.mean(img_arr)
    quantized = []
    for x in img_arr:
        if x >= mean:
            quantized.append(255)
        else:
            quantized.append(0)
    return quantized
```

```python
<<hamming-functions>>
full_list = hamming_a_directory('./screens/binary/')
full_list.sort(key=lambda x: int(x[2]))
return full_list[:10]
```

| Image 1 | Image 2 | Hamming distance |
|---|---|---|
| 1525_1.gif | 1525_2.gif | 2179 |
| 2079_2.gif | 2338_1.gif | 2680 |
| 1033_1.gif | 1530_1.gif | 2691 |
| 2488_1.gif | 2079_2.gif | 2695 |
| 1870_1.gif | 1033_1.gif | 2917 |
| 1525_2.gif | 1530_1.gif | 3204 |
| 1034_1.gif | 1525_2.gif | 3240 |
| 1870_1.gif | 1530_1.gif | 3242 |
| 1034_1.gif | 1530_1.gif | 3330 |
| 2338_1.gif | 1530_1.gif | 3539 |

A surprisingly solid baseline! Here we can see that the most similar images with this method are 1525_1 and 1525_2 (John and Jade), which are redraws of each other. Likewise, it catches the similarity between 2079_2 and 2338_1 (the two hands), as well as between 2079_2 and 2488_1 (one of the hands + the human gag version).

There are some misses, though: 1530 is considered similar to 1033 despite the two panels being largely unrelated, which I suspect is mostly because the background of both images is solid black. Likewise, it misses the comparison between 1033_1 and 1034_1, and doesn't match panels 2338_1 and 2488_1 despite favorably comparing both of those panels to 2079_2.

So it's clear we can use this to compare images to find similarities, but let's see if we can't get something slightly better.

Edge Detection

Edge detection is a class of tools in computer vision that mathematically determine points where an image has changes in brightness (i.e. edges). This is actually quite a bit more difficult than it seems, since images typically have gradients and non-uniform changes in brightness which make the edges hard to pin down.

That said, the nice thing about line art is that it involves, well, lines, and it seems really probable that edge detection will produce a solid result at extracting the outlines of drawn images. I'm pretty confident that this will yield us some good images so let's try and build it. We will be implementing Canny edge detection which applies a five-step process to the image:

- Apply Gaussian Blur (to reduce noise)

- Find intensity gradients (to find horizontal/vertical/diagonal edges)

- Apply non-maximum suppression (set all parts of the blurred edges to 0 except the local maxima)

- Apply double threshold (split detected edges into "strong", "weak", and "suppressed" based on gradient value)

- Track edges by hysteresis (remove weak edges that aren't near strong edges, usually due to noise)
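The last two steps are the least obvious, so here is a rough pure-Python sketch of them on a tiny grid of made-up gradient magnitudes. The function name, the grid, and the 8-neighbour connectivity are assumptions for illustration, and the thresholds of 100 and 200 are just example values; a real implementation would run on the output of the first three steps.

```python
from collections import deque

def double_threshold_hysteresis(grad, low, high):
    """Return a 0/1 edge map from a 2D list of gradient magnitudes."""
    rows, cols = len(grad), len(grad[0])
    edges = [[0] * cols for _ in range(rows)]
    queue = deque()

    # Double threshold: pixels at or above `high` are strong edges and are kept
    for r in range(rows):
        for c in range(cols):
            if grad[r][c] >= high:
                edges[r][c] = 1
                queue.append((r, c))

    # Hysteresis: grow outwards from strong pixels, keeping weak pixels
    # (between `low` and `high`) only if they connect to a strong one
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc] and grad[nr][nc] >= low):
                    edges[nr][nc] = 1
                    queue.append((nr, nc))
    return edges

# Hypothetical gradient magnitudes: one strong pixel (250) with a weak
# neighbour (150), plus an isolated weak pixel (120) that is probably noise
grad = [
    [ 10,  20,  10,  10],
    [ 10, 250, 150,  10],
    [ 10,  20,  10,  10],
    [120,  10,  10,  10],
]
edge_map = double_threshold_hysteresis(grad, low=100, high=200)
for row in edge_map:
    print(row)
```

The weak pixel next to the strong one survives, while the isolated weak pixel in the corner is dropped.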

This is even more straightforward to implement in Python, because OpenCV has built-in support for it already (Pillow just handles the GIF-to-PNG conversion), making this possible without actively writing each step!

```python
import cv2 as cv
import os
from PIL import Image

folder = "./screens/img/"
target = "./screens/canny/"
for image in os.listdir(folder):
    #check the full path, since os.listdir only returns bare filenames
    if not os.path.isfile(folder + image):
        continue
    imgdir = folder + image
    #gif -> png for opencv compatibility
    im_gif = Image.open(imgdir)
    saveto = target + image.split(".")[0] + ".png"
    im_gif.save(saveto)
    #Canny Edge Detection, overwrite png
    img_orig = cv.imread(saveto, 1)
    edges = cv.Canny(img_orig,100,200)
    img_new = Image.fromarray(edges)
    img_new.save(saveto)
```

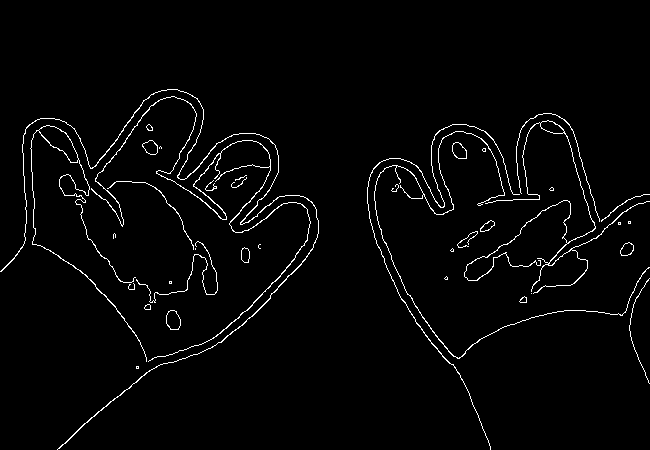

Here's what we end up with:

Wow, this turns out great!

We don't get amazing results on every frame, and some of the frames with busier backgrounds suffer a bit from this, like this one:

But I think the result extracts the edges with enough precision that it's functional enough for now.

```python
<<hamming-functions>>
full_list = hamming_a_directory('./screens/canny/')
full_list.sort(key=lambda x: int(x[2]))
return full_list[:10]
```

| Image 1 | Image 2 | Hamming distance |
|---|---|---|
| 2338_1.png | 2079_2.png | 31 |
| 1033_1.png | 1034_1.png | 224 |
| 2338_1.png | 1033_1.png | 458 |
| 1033_1.png | 2079_2.png | 461 |
| 1870_1.png | 2079_2.png | 479 |
| 2338_1.png | 1870_1.png | 480 |
| 1033_1.png | 1870_1.png | 480 |
| 2338_1.png | 1034_1.png | 514 |
| 2079_2.png | 1034_1.png | 519 |
| 2338_1.png | 2488_1.png | 522 |

The results for this hamming distance are somewhat disappointing: it's really accurate at detecting colorswaps - the hands and the two images of Jade receive appropriately low scores. But it's not so great at detecting reused outlines (the images of Jade and John no longer even crack the top 10 despite being the most similar by binary image hamming distance).

Perceptual Hashing

Hash functions are functions that can map data of an arbitrary size down to data of a fixed size. Usually these take the form of cryptographic hash functions, which are good for sensitive data because they have high dispersion (they change a lot when the input is changed even a little bit), so the hash isn't very useful for working backwards and determining what created it. Perceptual hashing, on the other hand, maps data onto hashes while maintaining a correlation between the source and the hash. If two things are similar, their hashes will be similar with perceptual hashing, which is a useful mechanism for locating similar images (TinEye allegedly uses this for reverse image searching).
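A quick way to see that dispersion, as a sketch using Python's standard `hashlib` (the input strings are arbitrary examples and the helper names are my own): changing a single character of the input flips roughly half of the 256 output bits of SHA-256, so the distance between two cryptographic hashes tells you nothing about how similar the inputs were.

```python
import hashlib

def sha256_bits(data: bytes) -> int:
    """Return the SHA-256 digest of `data` as one big integer."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big")

def bit_difference(a: int, b: int) -> int:
    """Hamming distance between two integers: count the set bits of a XOR b."""
    return bin(a ^ b).count("1")

# Two arbitrary inputs that differ by a single character
h1 = sha256_bits(b"homestuck panel 2079")
h2 = sha256_bits(b"homestuck panel 2078")

# Typically lands somewhere near 128, i.e. about half of the 256 bits
print(bit_difference(h1, h2))
```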

Hackerfactor has a semi-famous blogpost from 2011 about perceptual hashing algorithms, in which he describes average hashing and pHash - two straightforward and very powerful versions of the idea. Average hashing in particular is very easy to grasp:

- squish the image down to 8x8 pixels

- convert to greyscale

- average colors

- set every pixel to 1 or 0 depending on whether it is greater or less than the average

- turn this binary string into a 64-bit integer

Then, like with our other attempts, you can use Hamming distance to compare two images.
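The steps above can be sketched end to end on plain lists of pixel values. This is an illustration rather than the code used for the results in this writeup: it assumes the image has already been shrunk to 64 greyscale values (0-255), and the names `average_hash` and `hash_distance` and the toy pixel data are made up.

```python
def average_hash(pixels):
    """Turn 64 greyscale values into a 64-bit integer hash."""
    if len(pixels) != 64:
        raise ValueError("expected an 8x8 image flattened to 64 values")
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        # Shift in a 1 if the pixel is at least as bright as the mean, else a 0
        bits = (bits << 1) | (1 if p >= mean else 0)
    return bits

def hash_distance(h1, h2):
    """Hamming distance between two hashes: the number of differing bits."""
    return bin(h1 ^ h2).count("1")

# Toy 8x8 images: a bright left half, the same image uniformly darkened,
# and a bright top half
left_bright = [200, 200, 200, 200, 30, 30, 30, 30] * 8
left_darker = [p - 20 for p in left_bright]
top_bright = [200] * 32 + [30] * 32

print(hash_distance(average_hash(left_bright), average_hash(left_darker)))  # 0
print(hash_distance(average_hash(left_bright), average_hash(top_bright)))   # 32
```

The darkened copy hashes identically because every pixel keeps its position relative to the mean, which is the property that makes this useful for spotting recolors.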

Let's give it a whirl.

```python
import cv2 as cv
import os
import numpy as np
import PIL
from PIL import Image
<<hamming-functions>>

folder = "./screens/img/"
target = "./screens/phash/"
for image in os.listdir(folder):
    imgdir = folder + image
    #resize to 8x8
    im_gif = Image.open(imgdir)
    im_gif = im_gif.resize((8,8))
    saveto = target + image.split(".")[0] + ".png"
    im_gif.save(saveto)
    #convert to greyscale
    im_gif = Image.open(saveto).convert('L')
    im_gif.save(saveto)
    #for each pixel, assign 255 or 0 depending on whether it is at/above or below the mean
    quantized_img = Image.fromarray(np.reshape(quantize(list(im_gif.getdata())), (8, 8)).astype('uint8'))
    quantized_img.save(saveto)
```

Just a recap of all the steps:

8x8 image (shown here and also enlarged)

convert to greyscale

quantize based on mean value

find hamming distances between images

```python
<<hamming-functions>>
full_list = hamming_a_directory('./screens/phash/', False)
full_list.sort(key=lambda x: int(x[2]))
return full_list[:10]
```

| Image 1 | Image 2 | Hamming distance |
|---|---|---|
| 2338_1.png | 2079_2.png | 17 |
| 1033_1.png | 1870_1.png | 17 |
| 1033_1.png | 1034_1.png | 20 |
| 1525_1.png | 1530_1.png | 23 |
| 1525_2.png | 1530_1.png | 23 |
| 2488_1.png | 2079_2.png | 23 |
| 1525_1.png | 1525_2.png | 24 |
| 2338_1.png | 1033_1.png | 24 |
| 1525_1.png | 2079_2.png | 25 |
| 1525_2.png | 2079_2.png | 25 |

I'm a little unsure what to make of this. On the one hand, it gets almost every single match I wanted: the two hands are the closest, it catches all three of the sitting-at-computer images, and it catches the two Jades. It seems pretty good.

But I remain perplexed about why 1033 is so insistent on matching up with completely random images. It's hard to pick a winner between edge detection and perceptual hashing within the context of our 10-image set, since the former seems less likely to produce false positives but the latter catches more of the matches I wanted.

Another variant of this idea is pHash, which uses discrete cosine transform (DCT) in place of a simple average. OpenCV has a module for this so I won't bother coding it from scratch.

```python
import cv2 as cv
import os
import numpy as np
import PIL
from PIL import Image

folder = "./screens/img/"
target = "./screens/phash/"
for image in os.listdir(folder):
    imgdir = folder + image
    #gif -> png for opencv compatibility
    im_gif = Image.open(imgdir)
    saveto = target + image.split(".")[0] + ".png"
    im_gif.save(saveto)
    #Perceptual Hashing, overwrite png
    img_orig = cv.imread(saveto, 1)
    img_hash = cv.img_hash.pHash(img_orig)[0]
    bin_hash = map(lambda x: bin(x)[2:].rjust(8, '0'), img_hash)
    split_hash = []
    for x in bin_hash:
        row = []
        for y in x:
            row.append(int(y)*255)
        split_hash.append(row)
    img_new = Image.fromarray(np.array(split_hash).astype('uint8'))
    img_new.save(saveto)
```

```python
<<hamming-functions>>
full_list = hamming_a_directory('./screens/phash/', False)
full_list.sort(key=lambda x: int(x[2]))
return full_list[:10]
```

| Image 1 | Image 2 | Hamming distance |
|---|---|---|
| 1525_1.png | 1525_2.png | 17 |
| 2488_1.png | 2079_2.png | 19 |
| 1033_1.png | 1870_1.png | 22 |
| 1530_1.png | 1033_1.png | 23 |
| 2338_1.png | 2488_1.png | 23 |
| 1525_2.png | 2488_1.png | 24 |
| 2488_1.png | 1870_1.png | 24 |
| 1530_1.png | 2488_1.png | 25 |
| 1870_1.png | 2079_2.png | 25 |
| 1525_1.png | 1828_2.png | 26 |

No dice, this is even worse than average hashing.

Alright, as a last ditch attempt, let's try running this on the canny edge-detected images instead of the actual source images.

```python
import cv2 as cv
import os
import numpy as np
import PIL
from PIL import Image
<<hamming-functions>>

folder = "./screens/canny/"
target = "./screens/phash/"
for image in os.listdir(folder):
    imgdir = folder + image
    #resize to 8x8
    im_gif = Image.open(imgdir)
    im_gif = im_gif.resize((8,8))
    saveto = target + image.split(".")[0] + ".png"
    im_gif.save(saveto)
    #convert to greyscale
    im_gif = Image.open(saveto).convert('L')
    im_gif.save(saveto)
    #for each pixel, assign 255 or 0 depending on whether it is at/above or below the mean
    quantized_img = Image.fromarray(np.reshape(quantize(list(im_gif.getdata())), (8, 8)).astype('uint8'))
    quantized_img.save(saveto)
```

```python
<<hamming-functions>>
import numpy as np
full_list = hamming_a_directory('./screens/phash/', False)
full_list.sort(key=lambda x: int(x[2]))
return full_list[:10]
```

| Image 1 | Image 2 | Hamming distance |
|---|---|---|
| 2338_1.png | 2079_2.png | 0 |
| 1525_1.png | 1530_1.png | 2 |
| 1525_1.png | 2338_1.png | 2 |
| 1525_1.png | 1870_1.png | 2 |
| 1525_1.png | 2079_2.png | 2 |
| 1530_1.png | 2338_1.png | 2 |
| 1530_1.png | 1870_1.png | 2 |
| 1530_1.png | 2079_2.png | 2 |
| 2338_1.png | 1870_1.png | 2 |
| 2338_1.png | 1034_1.png | 2 |

Again, no dice; all of the images are far too similar to create substantially different hashes, which means the number of false matches is extraordinarily high.

Clustering

We take a brief pause here to ponder the following question: how are we going to pull out clusters of related images in a sea of comparisons? It's a bit weird of a problem, since there's no validation set, an unknown number of clusters, and an undefined/large quantity of "clusters" with cluster size 1 (i.e. unique panels).

The first attempt at a solution I think I'm going to take here is a very very simple one, keeping with the general idea of this being a relatively beginner take on the problem. We're going to take a two-step approach to pulling out the clusters.

Pruning

First, we are going to filter the images down to the ones that appear to belong to at least one cluster. Doing this is pretty straightforward: we can calculate the mean and standard deviation of all the pairwise distances, and keep only the panels that have at least one comparison more than one standard deviation below the mean (i.e. at least one unusually close match). This will allow us to only cluster data that can actually be clustered meaningfully, since after doing this we can just ignore unique panels.

```python
import numpy as np
import os
from PIL import Image
<<hamming-functions>>

def nxn_grid_from_itertools_combo(panels, full_list):
    # Create nxn grid such that x,y is a comparison between panel x and panel y
    # this is the format that you'd get if you did every comparison but we used itertools
    # to be more efficient. Now that we need these comparisons in a matrix we need to convert it.
    grid = []
    for image1 in panels:
        compare_img1 = []
        for image2 in panels:
            if image1 == image2:
                compare_img1.append(0)
            else:
                # look the pair up in either order
                val = [x[2] for x in full_list if ((x[0] == image1 and x[1] == image2) or \
                       (x[0] == image2 and x[1] == image1))]
                if val:
                    compare_img1.append(val[0])
                else:
                    compare_img1.append(grid[panels.index(image2)][panels.index(image1)])
        grid.append(compare_img1)
    return grid

def filter_out_nonduplicates(directory, resize=True):
    ## Perform comparisons without duplicates
    full_list = hamming_a_directory(directory, resize)
    ## convert comparisons to an nxn grid, as if we had duplicates
    # Create list of panels
    panels = os.listdir(directory)
    # Create nxn grid such that x,y is a comparison between panel x and panel y
    nxn_grid = nxn_grid_from_itertools_combo(panels, full_list)
    # find mu and sigma of each panel compared to each other panel, filter out probable matches
    return filter_grid(nxn_grid)

def filter_grid(grid):
    gmean = np.mean(grid)
    gstd = np.std(grid)
    filtered_grid = []
    for i, panel in enumerate(grid):
        # keep the panel if any comparison (other than with itself) is unusually close
        flag = False
        for x, comparison in enumerate(panel):
            if i != x and comparison < (gmean-gstd):
                flag = True
                break
        if flag:
            filtered_grid.append(panel)
    return filtered_grid

def move_directory(imgsrc, directory, filename):
    if not os.path.exists(directory):
        os.makedirs(directory)
    try:
        newfile = Image.open(imgsrc+filename)
        newfile.save(directory+filename)
    except OSError:
        # fall back to the original gif if the file can't be opened under this name
        newfile = Image.open(imgsrc+filename[:-3]+"gif")
        newfile.save(directory+filename[:-3]+"gif")
```

```python
<<hamming-functions>>
# ...plus the grid and filtering functions defined above
return filter_out_nonduplicates('./screens/canny/')[0]
```
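To see what the pruning rule does, here is a small self-contained sketch on a made-up 4x4 grid of distances. It follows the same rule as `filter_grid` (keep a panel if any of its comparisons is more than one standard deviation below the grid-wide mean) but returns panel names instead of rows; the names, distances, and the function name `prune_unique_panels` are hypothetical.

```python
from statistics import mean, pstdev

def prune_unique_panels(names, grid):
    """Keep panels with at least one unusually close match to another panel."""
    flat = [d for row in grid for d in row]
    # Population standard deviation, which is what np.std computes by default
    threshold = mean(flat) - pstdev(flat)
    kept = []
    for i, row in enumerate(grid):
        # Ignore the diagonal: every panel is at distance 0 from itself
        if any(d < threshold for j, d in enumerate(row) if j != i):
            kept.append(names[i])
    return kept

# Hypothetical distances: A and B are redraws of each other, C and D are unique
names = ["A", "B", "C", "D"]
grid = [
    [  0,  10, 500, 520],
    [ 10,   0, 510, 505],
    [500, 510,   0, 490],
    [520, 505, 490,   0],
]
print(prune_unique_panels(names, grid))  # ['A', 'B']
```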

A Brief Overview of K-Means Clustering

Then, we can apply a variation on k-means clustering to pull apart these values. This is probably not the most efficient way to do it, but it's pretty cool!

K-means clustering works via a four-step process:

- Initialize k random points in n-dimensional space, usually points in the dataset

- Group all data points to the closest point

- When all points are grouped, calculate the mean of everything assigned to that point

- If the grouping of points changed, repeat step 2 with the new means in place of the old points. If it stayed the same, return the clustering and stop.
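Those four steps fit in a few lines of plain Python. This is a minimal sketch on made-up 2D points rather than the implementation used later in this writeup; to keep the output reproducible it takes the starting points as an argument instead of picking them at random, and the name `kmeans` is my own.

```python
import math

def kmeans(data, centers, max_iter=100):
    """Cluster `data` (a list of coordinate tuples) around the given starting centers."""
    centers = list(centers)
    assignment = None
    for _ in range(max_iter):
        # Step 2: assign every data point to its closest center
        new_assignment = [
            min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
            for p in data
        ]
        # Step 4: stop once the grouping no longer changes
        if new_assignment == assignment:
            break
        assignment = new_assignment
        # Step 3: move each center to the mean of the points assigned to it
        for i in range(len(centers)):
            members = [p for p, a in zip(data, assignment) if a == i]
            if members:
                centers[i] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return assignment, centers

# Two obvious groups of hypothetical points
data = [(1, 1), (1, 2), (2, 1), (9, 9), (9, 10), (10, 9)]
# Step 1: starting centers, here simply the first two data points
assignment, centers = kmeans(data, centers=[data[0], data[1]])
print(assignment)  # [0, 0, 0, 1, 1, 1]
```

Even though both starting centers sit inside the same group, the second one gets dragged over to the other group after a single update.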

This is intuitive for clustering things relative to variables, but it’s not immediately obvious how we can apply it to our images.

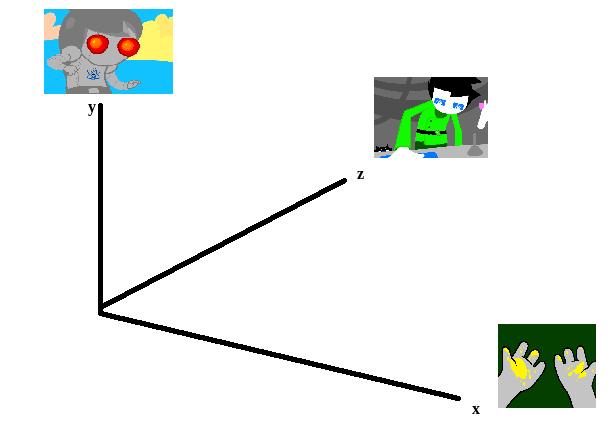

To illustrate what we will be doing, imagine a 2D plane with the x-axis representing “distance to panel A” and the y-axis representing “distance to panel B”

So if we take any random panel and use the hamming distance, you can represent this image in the “space” of these two panels. Proximity to 0 represents similarity, distance from 0 represents dissimilarity. So using panel A would yield something like (0, 15000) since panel A == itself, and likewise using panel B would yield something like (15000, 0). If you introduced panel C, which is a redraw of panel A, you might expect a value like (800, 15000). If we were only trying to cluster our images based on these two panels, the k-means solution makes perfect sense.

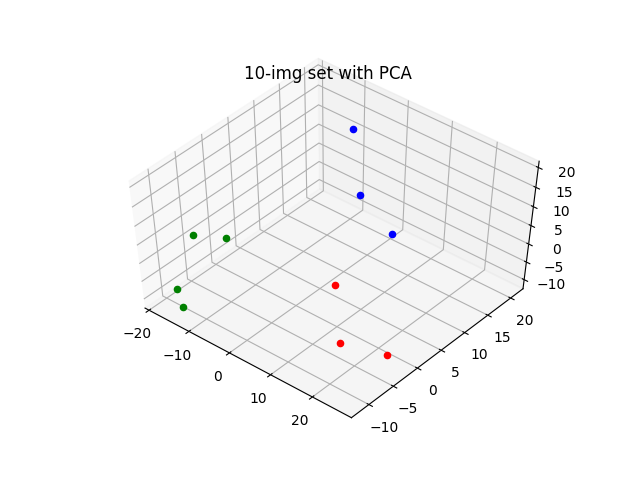

So you can imagine a third panel being considered as a z-axis, which turns this into a 3d space. It’s in three dimensions now, but the basic idea is still the same, and k-means solution still makes sense (just using three random values per point instead of 2).

We extend this from 3-dimensional space to n-dimensional space, which is harder to represent visually but is the same structurally as before — you can represent an image by its distance to every other image in the set, and you can initialize a point in this n-dimensional space by generating a list of n random numbers: [distance from panel1, distance from panel2, distance from panel3, … , distance from paneln ].
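As a sketch of that representation, the snippet below builds the full distance grid for a few made-up "panels" (short lists of bits standing in for images); row i of the grid is panel i's coordinates in the n-dimensional space. The panel data and the function name `distance_grid` are hypothetical.

```python
def distance_grid(panels):
    """Return an n x n grid where grid[i][j] is the Hamming distance between panels i and j."""
    def hamming(a, b):
        return sum(p != q for p, q in zip(a, b))
    return [[hamming(a, b) for b in panels] for a in panels]

# Hypothetical 8-pixel panels: C is a near-copy of A, B is unrelated to both
panels = {
    "A": [1, 1, 1, 1, 0, 0, 0, 0],
    "B": [0, 1, 0, 1, 0, 1, 0, 1],
    "C": [1, 1, 1, 0, 0, 0, 0, 0],
}
grid = distance_grid(list(panels.values()))
for name, row in zip(panels, grid):
    # Each row is that panel's position: [distance to A, distance to B, distance to C]
    print(name, row)
```

A and C end up with nearly identical coordinates ([0, 4, 1] and [1, 5, 0]), which is what lets a clustering algorithm group them.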

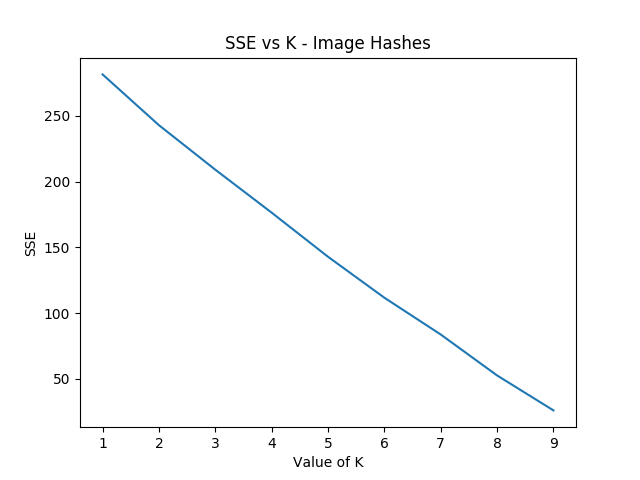

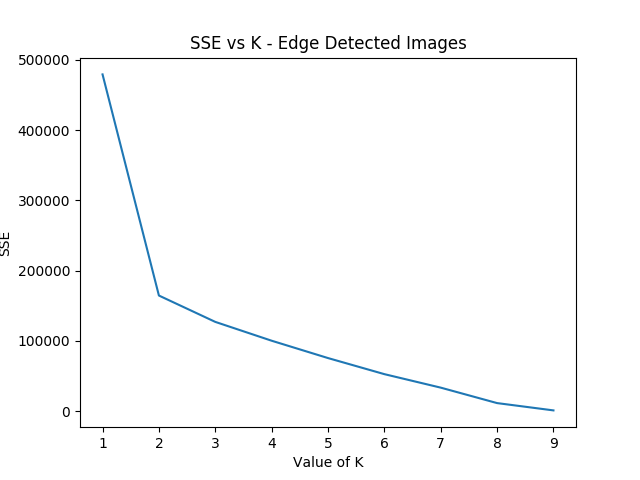

We can increment k starting from 1, run each value of k a few times, and pick the clustering with the lowest variance. We can then loosely adapt the elbow method to select a value of K.⁶
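One loose way to find the elbow, sketched below on made-up variance scores: for each K, compare how much the variance dropped going into K with how much it drops going out of K, and pick the K where that difference is largest. The scores and the function name `pick_elbow` are hypothetical, and this is a simplification of the idea rather than a standard algorithm.

```python
def pick_elbow(variances, first_k=1):
    """Return the K whose variance drop is followed by the sharpest flattening."""
    best_k, best_bend = None, float("-inf")
    # The first and last K have no neighbour on one side, so skip them
    for i in range(1, len(variances) - 1):
        drop_in = variances[i - 1] - variances[i]    # improvement gained by reaching this K
        drop_out = variances[i] - variances[i + 1]   # improvement from one more cluster
        bend = drop_in - drop_out
        if bend > best_bend:
            best_k, best_bend = first_k + i, bend
    return best_k

# Hypothetical intracluster variance for K = 1..5: the big win is at K = 3
variances = [100, 90, 20, 18, 17]
print(pick_elbow(variances))  # 3
```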

It's within this framework that we can apply k-means clustering as an OK means of sorting the images into visually similar groups.

Implementation

```python
import itertools, math, multiprocessing, os, random

import numpy as np
from joblib import Parallel, delayed
from PIL import Image


def hamming(x, y):
    if len(x) != len(y):
        return -1
    # Use the distance to the image or to the image's inverse, whichever is closer
    differing = sum(c1 != c2 for c1, c2 in zip(x, y))
    return min(differing, len(x) - differing)


def compare_img(image1, image2, dire, resize):
    i1 = Image.open(dire + image1)
    if resize:
        i1 = i1.resize((100, 100))
    i2 = Image.open(dire + image2)
    if resize:
        i2 = i2.resize((100, 100))
    return hamming(i1.tobytes(), i2.tobytes())


# Helper function so the comparison can be called in parallel
def output_format(image1, image2, dire, resize):
    return [image1, image2, compare_img(image1, image2, dire, resize)]


def hamming_a_directory(dire, resize=True):
    num_cores = multiprocessing.cpu_count()
    return Parallel(n_jobs=num_cores)(
        delayed(output_format)(image1, image2, dire, resize)
        for image1, image2 in itertools.combinations(os.listdir(dire), 2))


def quantize(img_arr, dimx=8, dimy=8):
    mean = np.mean(img_arr)
    return [255 if x >= mean else 0 for x in img_arr]


# Returns a number representing the distance between two points
def find_distance(x, y):
    # Use Hamming distance above R^10, otherwise use Euclidean distance
    if len(x) > 10:
        return hamming(quantize(x), quantize(y))
    # sqrt((a-x)^2 + (b-y)^2 + (c-z)^2 ...)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


# A single attempt at clustering data into k clusters.
# max_iter caps the loop, since the Hamming branch may never settle.
def kmeans_clustering(matrix, k, max_iter=100):
    # Init k random points
    points = random.sample(matrix, k)
    clusters = None
    for _ in range(max_iter):
        oldclusters = clusters
        # Start from fresh, empty clusters on every pass
        clusters = [[] for _ in range(k)]
        # Group every data point with its nearest point
        for x in matrix:
            distances = [find_distance(x, y) for y in points]
            clusters[distances.index(min(distances))].append(x)
        # Stop once the grouping no longer changes
        if clusters == oldclusters:
            break
        # When all points are grouped, calculate the new mean for each cluster
        for i, cluster in enumerate(clusters):
            if cluster:
                points[i] = [np.mean(dim) for dim in zip(*cluster)]
    return clusters


# Run k-means a few times, return the clustering with minimum intracluster variance
def cluster_given_K(matrix, k, n=25):
    clusterings = [kmeans_clustering(matrix, k) for _ in range(n)]
    # Intracluster variance here is just the sum of the distances
    # from every point to its cluster's center
    distances = []
    for clustering in clusterings:
        variance = 0
        for cluster in clustering:
            center = [np.mean(dim) for dim in zip(*cluster)]
            for point in cluster:
                variance += find_distance(point, center)
        distances.append(variance)
    best = distances.index(min(distances))
    return [clusterings[best], distances[best]]


# Loosely look for the elbow in the graph
def elbowmethod(candidates, debug_flag=0):
    varscores = [candidate[1] for candidate in candidates]
    # Just for debug purposes
    if debug_flag == 1:
        return varscores
    percentages = [1 - (x / varscores[0]) for x in varscores]
    elbowseek = []
    for point in range(0, len(percentages) - 1):
        if point == 0:
            elbowseek.append(0)
        elif point == len(percentages) - 1:
            elbowseek.append(percentages[point] - percentages[point - 1])
        else:
            elbowseek.append((percentages[point] - percentages[point - 1])
                             - (percentages[point + 1] - percentages[point]))
    return elbowseek


# Runs cluster_given_K multiple times, once for each value of k
def cluster(matrix, minK=1, maxK=-1, runs=50, debug_flag=0):
    if not matrix:
        return []
    if maxK == -1:
        maxK = len(matrix)
    num_cores = multiprocessing.cpu_count()
    candidates = Parallel(n_jobs=num_cores)(
        delayed(cluster_given_K)(matrix, x, runs) for x in range(minK, maxK))
    elbowseek = elbowmethod(candidates, debug_flag)
    best = candidates[elbowseek.index(max(elbowseek))][0]
    if debug_flag == 1:
        return elbowseek, candidates, best
    return best


def give_names(clustering, names, grid):
    return [[names[grid.index(y)] for y in x] for x in clustering]
```

```python
cluster([[1, 1], [1, 1], [1, 0], [1, 3], [10, 12], [10, 11], [10, 10],
         [20, 20], [22, 20], [21, 21]])
```

| Cluster | Points |
| --- | --- |
| 1 | (10 12), (10 11), (10 10) |
| 2 | (1 1), (1 1), (1 0), (1 3) |
| 3 | (20 20), (22 20), (21 21) |

Awesome, we have an implementation working now.
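To double-check the toy grouping without random restarts or joblib, here is a compact, standard-library-only version of the same idea (Lloyd's algorithm with plain Euclidean distance). The function name `lloyd` and the starting centers are my own choices for illustration, not part of the implementation above.

```python
import math


def lloyd(points, centers, max_iter=100):
    """Plain k-means (Lloyd's algorithm) from fixed starting centers."""
    assignment = None
    for _ in range(max_iter):
        # Assign each point to the index of its nearest center
        new_assignment = [
            min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # nothing moved, so we have converged
        assignment = new_assignment
        # Move each center to the mean of the points assigned to it
        for c in range(len(centers)):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centers[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return [[p for p, a in zip(points, assignment) if a == c]
            for c in range(len(centers))]


toy = [[1, 1], [1, 1], [1, 0], [1, 3], [10, 12], [10, 11], [10, 10],
       [20, 20], [22, 20], [21, 21]]
# Starting centers are an arbitrary choice: one toy point from each visible group
clusters = lloyd(toy, [[1, 1], [10, 10], [20, 20]])
for c in clusters:
    print(c)
```

From these starting centers the assignment settles after one update, and the three groups match the ones in the table.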

K-Means vs Canny Edge Detection

Before running it on the full webcomic (pruned to only include likely-clustered images), let's see what we get if we run it on our 10-image dataset of Canny edge-detected images.

```python
# Reuses hamming_a_directory, cluster and give_names from the implementation above
import os

import numpy as np
from PIL import Image


def nxn_grid_from_itertools_combo(panels, full_list):
    # Create an nxn grid such that (x, y) is a comparison between panel x and panel y.
    # This is the format you'd get from doing every comparison, but we used
    # itertools to be more efficient. Now that we need these comparisons in a
    # matrix, we need to convert them.
    grid = []
    for image1 in panels:
        compare_img1 = []
        for image2 in panels:
            if image1 == image2:
                compare_img1.append(0)
            else:
                # The pair may be stored in either order
                val = [x[2] for x in full_list
                       if (x[0] == image1 and x[1] == image2)
                       or (x[0] == image2 and x[1] == image1)]
                if val:
                    compare_img1.append(val[0])
                else:
                    compare_img1.append(grid[panels.index(image2)][panels.index(image1)])
        grid.append(compare_img1)
    return grid


def filter_out_nonduplicates(directory, resize=True):
    # Perform comparisons without duplicates
    full_list = hamming_a_directory(directory, resize)
    # Convert comparisons to an nxn grid, as if we had duplicates
    panels = os.listdir(directory)
    nxn_grid = nxn_grid_from_itertools_combo(panels, full_list)
    # Find mu and sigma of each panel compared to each other panel,
    # filter out probable matches
    return filter_grid(nxn_grid)


def filter_grid(grid):
    gmean = np.mean(grid)
    gstd = np.std(grid)
    filtered_grid = []
    for i, panel in enumerate(grid):
        # Keep a panel if any comparison to another panel is unusually close
        if any(comparison < gmean - gstd
               for x, comparison in enumerate(panel) if i != x):
            filtered_grid.append(panel)
    return filtered_grid


def move_directory(imgsrc, directory, filename):
    if not os.path.exists(directory):
        os.makedirs(directory)
    try:
        newfile = Image.open(imgsrc + filename)
        newfile.save(directory + filename)
    except Exception:
        # Fall back to the .gif version of the same panel
        newfile = Image.open(imgsrc + filename[:-3] + "gif")
        newfile.save(directory + filename[:-3] + "gif")


directory = './screens/canny/'
full_list = hamming_a_directory(directory)
panels = os.listdir(directory)
grid = nxn_grid_from_itertools_combo(panels, full_list)
give_names(cluster(grid), panels, grid)
```

| Cluster | Panels |
| --- | --- |
| 1 | 18282.png |
| 2 | 15251.png, 15252.png, 15301.png, 23381.png, 24881.png, 10331.png, 18701.png, 20792.png, 10341.png |

Well, that's sort of funny; the elbow method yields k=2 here because 18282 is so noisy compared to all the other panels, which certainly makes enough sense. Let's see if we can force it to use at least three clusters.
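A toy illustration of why a single outlier drags the elbow to k=2. The one-dimensional values below are invented stand-ins (nine similar values and one very different one), and the brute-force search over contiguous splits is only practical for tiny inputs like this. The score is the same one `elbowmethod` uses: the improvement at this k minus the improvement at the next k.

```python
from itertools import combinations


def group_cost(group):
    """Sum of distances from each value to the group's mean."""
    mean = sum(group) / len(group)
    return sum(abs(x - mean) for x in group)


def best_cost(values, k):
    """Lowest total cost over every way to cut the sorted values into k runs."""
    values = sorted(values)
    best = float("inf")
    for cuts in combinations(range(1, len(values)), k - 1):
        edges = (0, *cuts, len(values))
        cost = sum(group_cost(values[a:b]) for a, b in zip(edges, edges[1:]))
        best = min(best, cost)
    return best


# Nine similar values and one outlier, standing in for the noisy panel
values = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
costs = [best_cost(values, k) for k in range(1, 5)]

# Elbow score per k: this k's improvement minus the next k's improvement
explained = [1 - c / costs[0] for c in costs]
scores = {
    i + 1: (explained[i] - explained[i - 1]) - (explained[i + 1] - explained[i])
    for i in range(1, len(explained) - 1)
}
elbow_k = max(scores, key=scores.get)
print(costs, elbow_k)
```

Isolating the outlier removes most of the total cost in one step, so every later split looks insignificant by comparison and the score peaks at k=2. Raising the minimum k moves the baseline past that first drop.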

```python
# Same helpers as in the previous block; only the minimum k changes
directory = './screens/canny/'
full_list = hamming_a_directory(directory)
panels = os.listdir(directory)
grid = nxn_grid_from_itertools_combo(panels, full_list)
give_names(cluster(grid, 3), panels, grid)
```

| Cluster | Panels |
| --- | --- |
| 1 | 10331.png, 10341.png |
| 2 | 23381.png, 20792.png |
| 3 | 15251.png |
| 4 | 18282.png |
| 5 | 24881.png |
| 6 | 15252.png |
| 7 | 18701.png |
| 8 | 15301.png |

That's better.

Let's run it on the pruned list real fast just to make sure the implementation works the full way through.

```python
# Same helpers as in the previous blocks
directory = './screens/canny/'
ham = filter_out_nonduplicates(directory)
give_names(cluster(ham[2]), ham[0], ham[2])
```

| Cluster | Panels |
| --- | --- |
| 1 | 10331.png, 10341.png |
| 2 | 23381.png, 20792.png |

K-Means vs Perceptual Hashes of Images

Something funny I'm noticing is that the elbow method fails terribly for such a small subset of the hash images, but the clustering is pretty solid if you have a value for K determined already. Here's what it wants to spit out normally:

    import random, math
    import io, itertools, os
    import multiprocessing
    import numpy as np
    from joblib import Parallel, delayed
    import PIL
    from PIL import Image

    def hamming(x, y):
        if len(x) == len(y):
            # Choosing the distance between the image or the image's inverse, whichever is closer
            return min(sum(c1 != c2 for c1, c2 in zip(x, y)),
                       sum(c1 == c2 for c1, c2 in zip(x, y)))
        else:
            return -1

    def compare_img(image1, image2, dire, resize):
        i1 = Image.open(dire + image1)
        if resize:
            i1 = i1.resize((100, 100))
        i1_b = i1.tobytes()
        i2 = Image.open(dire + image2)
        if resize:
            i2 = i2.resize((100, 100))
        i2_b = i2.tobytes()
        dist = hamming(i1_b, i2_b)
        return dist

    # including here a helper function so I can call a function in parallel
    def output_format(image1, image2, dire, resize):
        return [image1, image2, compare_img(image1, image2, dire, resize)]

    def hamming_a_directory(dire, resize=True):
        num_cores = multiprocessing.cpu_count()
        return Parallel(n_jobs=num_cores)(
            delayed(output_format)(image1, image2, dire, resize)
            for image1, image2 in itertools.combinations(os.listdir(dire), 2))

    def quantize(img_arr, dimx=8, dimy=8):
        # threshold every value against the mean: 255 at or above it, 0 below
        mean = np.mean(img_arr)
        return [255 if x >= mean else 0 for x in img_arr]

    # returns a number representing distance between two points
    def find_distance(x, y):
        # use Hamming distance if greater than R^10, otherwise use Euclidean distance
        if len(x) > 10:
            return hamming(quantize(x), quantize(y))
        else:
            # sqrt( (a-x)^2 + (b-y)^2 + (c-z)^2 ... )
            distance = 0
            for origin, destination in zip(x, y):
                distance += (origin - destination) ** 2
            return math.sqrt(distance)

    # A single attempt at clustering data into K points
    def kmeans_clustering(matrix, k, max_iter=100):
        # init k random points
        points = random.sample(matrix, k)
        oldclusters = None
        clusters = []
        # loop until the clusters don't change; max_iter is an added safeguard
        # (not in the original), since the Hamming branch is not guaranteed to settle
        for _ in range(max_iter):
            # Build fresh lists every pass. Reusing one aliased list makes the
            # old/new comparison always equal, which stops after a single pass.
            clusters = [[] for _ in range(k)]
            # group all data points to nearest point
            for x in matrix:
                distances = [find_distance(x, y) for y in points]
                clusters[distances.index(min(distances))].append(x)
            if clusters == oldclusters:
                break
            oldclusters = clusters
            # when all points are grouped, calculate new mean for each point
            for i, members in enumerate(clusters):
                if members:
                    points[i] = [np.mean(dim) for dim in zip(*members)]
        return clusters

    # run K-means a few times, return clustering with minimum intracluster variance
    def cluster_given_K(matrix, k, n=25):
        # run k-means a few times
        clusterings = [kmeans_clustering(matrix, k) for _ in range(n)]
        # calculate intracluster variance for each clustering
        ## this is just the sum of all distances from every point to its cluster's center
        distances = []
        for clustering in clusterings:
            variance = 0
            for members in clustering:
                center = [np.mean(dim) for dim in zip(*members)]
                for point in members:
                    variance += find_distance(point, center)
            distances.append(variance)
        return [clusterings[distances.index(min(distances))], min(distances)]

    # Loosely look for the elbow in the graph
    def elbowmethod(candidates, debug_flag=0):
        varscores = [candidate[1] for candidate in candidates]
        # just for debug purposes
        if debug_flag == 1:
            return varscores
        # float() keeps this a true division even when the scores are integers
        percentages = [1 - (x / float(varscores[0])) for x in varscores]
        elbowseek = []
        for point in range(0, len(percentages) - 1):
            if point == 0:
                elbowseek.append(0)
            elif point == len(percentages) - 1:
                elbowseek.append(percentages[point] - percentages[point - 1])
            else:
                elbowseek.append((percentages[point] - percentages[point - 1])
                                 - (percentages[point + 1] - percentages[point]))
        return elbowseek

    # Runs cluster_given_K multiple times, for each value of K
    def cluster(matrix, minK=1, maxK=-1, runs=50, debug_flag=0):
        if not matrix:
            return []
        if maxK == -1:
            maxK = len(matrix)
        num_cores = multiprocessing.cpu_count()
        candidates = Parallel(n_jobs=num_cores)(
            delayed(cluster_given_K)(matrix, x, runs) for x in range(minK, maxK))
        elbowseek = elbowmethod(candidates, debug_flag)
        if debug_flag == 1:
            return elbowseek, candidates, candidates[elbowseek.index(max(elbowseek))][0]
        return candidates[elbowseek.index(max(elbowseek))][0]

    def give_names(clustering, names, grid):
        ret = []
        for x in clustering:
            ret_a = []
            for y in x:
                ret_a.append(names[grid.index(y)])
            ret.append(ret_a)
        return ret

    def nxn_grid_from_itertools_combo(panels, full_list):
        # Create an nxn grid such that (x, y) is a comparison between panel x and panel y.
        # This is the format you'd get from doing every comparison, but we used itertools
        # to be more efficient. Now that we need the comparisons in a matrix, we convert them.
        grid = []
        for image1 in panels:
            compare_img1 = []
            for image2 in panels:
                if image1 == image2:
                    compare_img1.append(0)
                else:
                    # each unordered pair was compared once, so check both orderings
                    val = [x[2] for x in full_list
                           if (x[0] == image1 and x[1] == image2)
                           or (x[0] == image2 and x[1] == image1)]
                    if val:
                        compare_img1.append(val[0])
                    else:
                        compare_img1.append(grid[panels.index(image2)][panels.index(image1)])
            grid.append(compare_img1)
        return grid

    def filter_out_nonduplicates(directory, resize=True):
        ## Perform comparisons without duplicates
        full_list = hamming_a_directory(directory, resize)
        ## Convert comparisons to an nxn grid, as if we had duplicates
        # Create list of panels
        panels = os.listdir(directory)
        # Create nxn grid such that (x, y) is a comparison between panel x and panel y
        nxn_grid = nxn_grid_from_itertools_combo(panels, full_list)
        # Find mu and sigma of each panel compared to each other panel, filter out probable matches
        return filter_grid(nxn_grid)

    def filter_grid(grid):
        gmean = np.mean(grid)
        gstd = np.std(grid)
        filtered_grid = []
        for i, panel in enumerate(grid):
            # keep a panel if any comparison (other than with itself) is more than
            # one standard deviation below the mean distance
            if any(comparison < (gmean - gstd)
                   for x, comparison in enumerate(panel) if i != x):
                filtered_grid.append(panel)
        return filtered_grid

    def move_directory(imgsrc, directory, filename):
        if not os.path.exists(directory):
            os.makedirs(directory)
        try:
            newfile = Image.open(imgsrc + filename)
            newfile.save(directory + filename)
        except IOError:
            # fall back to the .gif version of the panel
            newfile = Image.open(imgsrc + filename[:-3] + "gif")
            newfile.save(directory + filename[:-3] + "gif")

    directory = './screens/phash/'
    ham = filter_out_nonduplicates(directory, False)
    return give_names(cluster(ham[2]), ham[0], ham[2])

| 15251.png | 15252.png | 15301.png | 23381.png | 24881.png | 20792.png | 18282.png |

| 10331.png | 18701.png | 10341.png |
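
One way to see why the elbow heuristic struggles on a small subset: it picks the K where the variance curve bends most sharply, and with only a few candidate values of K a single uneven drop can dominate. A minimal sketch with made-up variance scores (the numbers and the `elbow_index` name are illustrative assumptions, not output from the code above):

```python
def elbow_index(scores):
    """Return the index of the sharpest bend in a decreasing score curve,
    measured by the discrete second difference."""
    bends = [0.0]  # the first point has no left neighbour to compare against
    for i in range(1, len(scores) - 1):
        drop_before = scores[i - 1] - scores[i]
        drop_after = scores[i] - scores[i + 1]
        bends.append(drop_before - drop_after)
    return bends.index(max(bends))

# made-up intracluster variance per candidate K (index 0 is the smallest K)
smooth = [100.0, 40.0, 30.0, 25.0, 22.0]  # one early, obvious bend
uneven = [100.0, 80.0, 65.0, 30.0, 28.0]  # a late drop moves the pick

smooth_pick = elbow_index(smooth)
uneven_pick = elbow_index(uneven)
```

On the smooth curve the pick lands on the second candidate; on the uneven one it jumps to the fourth, even though both curves cover the same range. Fixing K by hand sidesteps that sensitivity.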

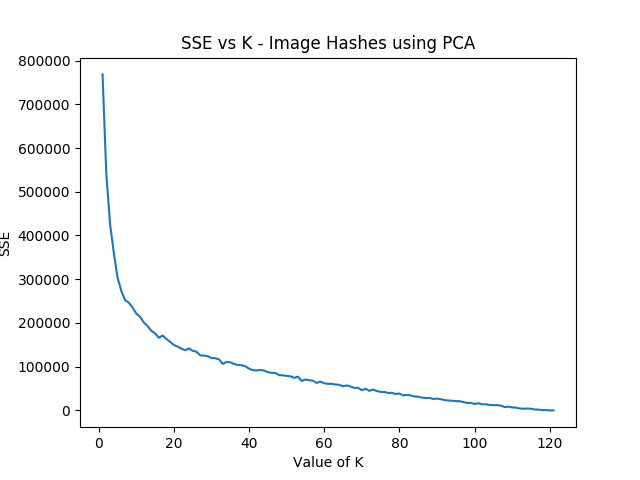

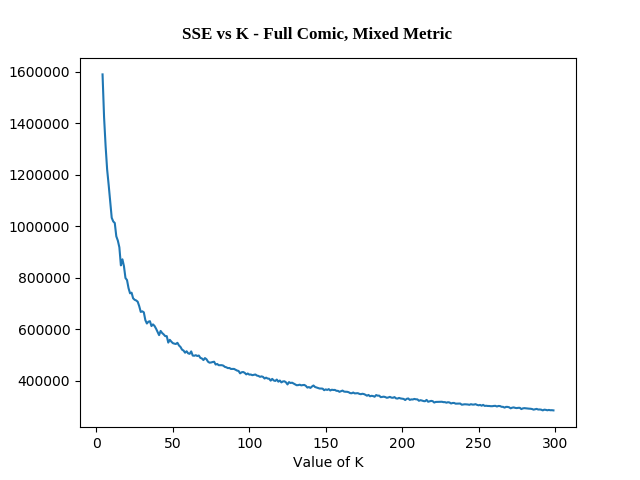

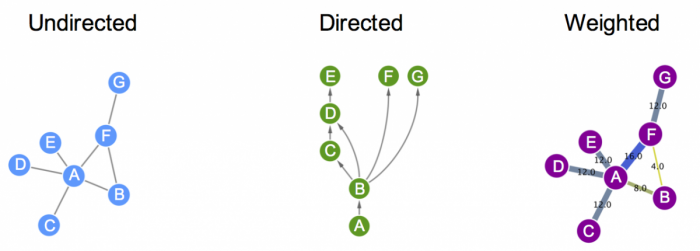

Yuck! Here's the same code but with a narrow range of k-values already selected: